White Paper

Intel and Cadence Collaboration on UCIe: Demonstration of Simulation Interoperability

A UCIe Case Study

The Universal Chiplet Interconnect Express™ (UCIe™) 1.0 specification was announced in early 2022, and an updated UCIe 1.1 specification was released on August 8, 2023. This open chiplet standard allows for heterogeneous integration of die-to-die link interconnects within the same package. The UCIe standard offers advanced package and standard package options to trade off cost, bandwidth density, power, and performance. The standard also incorporates a layered protocol stack, including the physical layer, the die-to-die adapter layer, and protocol layers. The rapid adoption of a standardized chiplet ecosystem targets the disaggregation of SoCs, advancing toward a system-in-package (SiP). UCIe KPI metrics are set to meet the demands of high-bandwidth, low-power, and low-latency applications across emerging market segments such as artificial intelligence, machine learning, hyperscale, edge and cloud compute, and more.

In this paper, we discuss the history of Cadence’s simulation interoperability between an Intel host and a Cadence IP, which has been key to deploying new and emerging technologies such as UCIe and CXL.

Overview

Introduction

Emerging standards often present unique challenges and limited opportunities for interoperability as the ecosystem continues to evolve. The arrival of the UCIe standard introduced a multi-layer communication protocol, allowing on-package integration of chiplets from different foundries and process nodes. UCIe is expected to enable power-efficient and low-latency chiplet solutions as heterogeneous disaggregation of SoCs becomes mainstream to overcome the challenges of Moore’s Law. To keep up with the rapid expansion of the chiplet ecosystem, there is a drive to set up a standard ecosystem that enables design IP, verification IP, and testing practices for compliance.

Cadence and Intel have a history of collaborating on simulation-based interop of emerging standards as outlined in the CXL Case Study Whitepaper “Interop Shift Left: Using Pre-Silicon Simulation for Emerging Standards”, published in 2021. The CXL whitepaper described the RTL simulation-based interop of the CXL1.1/2.0 standards performed using the Cadence CXL Endpoint IP and the Intel Root Port CXL Host IP. This successful RTL-based simulation interop was initiated originally for CXL1.1, migrated to CXL2.0, and then expanded in scope to PCIe5.0-IDE (Integrity and Data Encryption) interop.

In 2023, Cadence and Intel expanded this collaboration to focus on simulation interop of the UCIe solutions implemented by both teams.

UCIe Compliance Challenges

The UCIe specification for compliance and testing continued to be updated and refined as the standard evolved from revision 1.0 to revision 1.1. UCIe compliance topics include the electrical, mechanical, die-to-die adapter, protocol layer, physical layer, and integration of the golden die link to the vendor DUT. PHY electrical and adapter compliance covers the die-to-die high-speed interface as well as the RDI and FDI interfaces. The mechanical compliance of the channel is tightly coupled with the type of reference package used for integration.

Electrical compliance features include timing and voltage margining, BER measurements, RX and TX lane-to-lane skew at the module, and TX equalization. Adapter compliance requires that the golden die adapter has the capability to inject consistent and inconsistent sideband messages to test the DUT for a variety of error scenarios. The DUT must also have control and status registers for injecting test or NOP flits, injecting link state requests or response sideband messages, and retry injection control.

A compliance platform is being introduced with proposed phases to set up compliance testing. The compliance setup will consist of a golden UCIe die reference, with all layers of the UCIe stack to be tested with the reference DUT design. The DUT should have cleared die sort and pre-bond testing.

The pros and cons of containing all functionality in the golden die, versus enabling flexibility by moving some functions into an FPGA at the platform level, are under discussion. The FPGA could serve as a traffic or pattern generator, in addition to other connections or use cases that enable functional flexibility. JTAG test ports will be a requirement on all golden dies, DUTs, and chiplets. The protocol support will depend on the type of traffic to be injected. Initial protocol integration is to include streaming in raw mode, but adding PCIe/CXL protocol support may still require further discussion. In parallel, interest among vendors in volunteering to create the golden die is still being gauged.

The golden die could have a flexible or a fixed form factor. Topics of discussion also include giving flexibility to the DUT form factor, or the part of the package where the DUT sits. This would require a new package for each new DUT, paid for by the UCIe vendor seeking compliance testing. Whether to keep the golden die separate for the advanced and standard packages or to build one combined golden die is under debate because of cost and effort. Keeping them separate makes the most sense at present because of the complexity of creating a single golden die that covers all the variables of advanced and standard UCIe. Merging everything into one golden die remains a possible future path; however, current plans are to proceed with separate golden dies for the standard package and the advanced package.

The Role of Pre-Silicon Interoperability

The UCIe IP is a multi-layered full-subsystem solution that includes a PHY IP, a D2D adapter, and an optional protocol or bridge layer to provide a standard interface to the SoC connection.

The entire system is designed concurrently, resulting in all layers going through design and debug at the same time. However, silicon-based interoperability tests are not possible until fabrication and packaging are complete, which leads to a feedback cycle that can take many months. In the entire stack, the only portion dependent on silicon for its interoperability is a sub-section of the PHY called the Electrical/Analog front-end. The remainder of the PHY (the PHY logic) and the upper layers (D2D adapter and protocol stack) can all be tested before the design has gone through silicon fabrication. Since these portions are fully digital, the analog effects of silicon variability do not impact them. The aim of testing these portions is to root out design mistakes, and that can be done with pre-silicon interoperability tests. In addition, layers above the UCIe specification can also be tested, allowing users and UCIe IP designers to implement the “Shift-left” strategy of testing all sections of the design as early as possible without being gated by another section. This provides valuable early feedback, allowing many iterations of design refinement in a much shorter time. The overall time-to-market improves substantially, and the bug rate drops. However, there are challenges in this process, and we present them next.

UCIe Verification Challenges

The interoperability activities present unique challenges to the verification environment. Standard PHY-level verification techniques include loopback testing, back-to-back testing, as well as testing with the Bus Functional Model (BFM). However, in the case of interoperability, it was necessary to design a new environment that would drive the sideband and mainband signals from the vectors of our partner PHY with the appropriate sampling rate.

The approach of using vectors for the Intel PHY TX presented a few challenges in simulation. Primarily, the challenges were related to the sequencing of the sideband packets as the LTSM (Link Training State Machine) advanced to the ACTIVE state. Since the Intel vectors were sending TX sideband messages to the Cadence PHY without any RX from the Cadence PHY, the vectors would often move on to the next LTSM state before the Cadence PHY had a chance to complete the required processes for the current state. In this scenario, the two sides would become out of sync without any possibility of recovery.

To overcome these sequencing challenges, the vector set from Intel was modified to insert delays and replicate certain responses required to handshake with the Cadence PHY.
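
The sequencing problem and its fix can be illustrated with a toy model. The sketch below is not the Intel or Cadence environment; the timestamps, the per-state processing times, and the function name `replay` are all hypothetical, chosen only to show why open-loop vector replay loses sync and how inserted delays restore it.

```python
def replay(vectors, rx_cycles, gated):
    """Replay (timestamp, state) vectors against a toy receiver.

    rx_cycles[state] is the (hypothetical) number of cycles the receiver
    needs to finish a state before it can accept the next message.
    Returns (in_sync, log), where log lists (send_time, state) as sent.
    """
    rx_ready_at = 0   # cycle at which the receiver finishes its current state
    shift = 0         # total delay inserted into the vector stream so far
    log = []
    for stamp, state in vectors:
        send_at = stamp + shift
        if send_at < rx_ready_at:
            if not gated:
                # Open-loop replay: the message arrives too early and the
                # two sides are out of sync with no way to recover.
                return False, log
            # Gated replay: insert just enough delay for the receiver.
            shift += rx_ready_at - send_at
            send_at = rx_ready_at
        log.append((send_at, state))
        rx_ready_at = send_at + rx_cycles[state]
    return True, log


# Hypothetical vector timestamps and receiver processing times (in cycles).
VECTORS = [(0, "SBINIT"), (100, "MBINIT"), (200, "MBTRAIN"),
           (300, "LINKINIT"), (400, "ACTIVE")]
RX_CYCLES = {"SBINIT": 80, "MBINIT": 150, "MBTRAIN": 120,
             "LINKINIT": 50, "ACTIVE": 0}

OPEN_LOOP = replay(VECTORS, RX_CYCLES, gated=False)
GATED = replay(VECTORS, RX_CYCLES, gated=True)
```

With these made-up numbers, the open-loop replay fails at MBTRAIN because the receiver is still busy with MBINIT, while the gated replay stretches the stream and reaches ACTIVE.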

Additional challenges when working with UCIe vectors as opposed to working with a real UCIe PHY:

UCIe Simulation Logistics

The Cadence UCIe advanced package PHY model with x64 lanes was used for pre-silicon verification with Intel’s UCIe vectors. The UCIe sideband was used for initialization, link training, and messaging between the die links. Parameter information, such as speed or link training results, was exchanged with the link partner over the sideband. The LTSM state definitions in the UCIe specification were followed at a high level to step through each state during initialization.

Initial Interop

The initial interop environment consisted of a custom BFM module with SystemVerilog tasks that read the vector files and initiated the sideband and mainband traffic to the Cadence UCIe PHY. There was also a script environment written in Tcl that was responsible for starting the traffic from the vectors at the correct time, once the Cadence PHY was out of reset. Additionally, the script environment provided the flexibility to insert delays in the vector stream to accommodate certain timing requirements in the Cadence PHY, such as the time it takes for the Cadence PLL to lock during a rate change.
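
As a rough illustration of this flow, the sketch below parses a vector stream, shifts its start to an out-of-reset time, and inserts a delay at a chosen point. It is written in Python rather than SystemVerilog or Tcl, and the three-column file format, the payload values, the cycle counts, and the function names are all assumptions made for the example, not the format used in the actual environment.

```python
import io


def read_vectors(stream):
    """Parse lines of '<cycle> <SB|MB> <hex payload>' (hypothetical format).

    Blank lines and '#' comments are skipped.
    """
    entries = []
    for line in stream:
        line = line.split("#", 1)[0].strip()
        if not line:
            continue
        cycle, channel, payload = line.split()
        entries.append((int(cycle), channel, int(payload, 16)))
    return entries


def retime(entries, start_cycle, delays):
    """Start the stream at start_cycle and stretch it at each delay point.

    delays maps an original cycle number to the extra cycles inserted
    before every entry stamped at or after that cycle.
    """
    out = []
    for cycle, channel, payload in entries:
        extra = sum(n for at, n in delays.items() if cycle >= at)
        out.append((cycle + start_cycle + extra, channel, payload))
    return out


# Invented sample: two sideband messages, then mainband data after a rate change.
SAMPLE = """\
# cycle channel payload
0   SB 0x91
40  SB 0xA5
100 MB 0xFFFF   # first mainband pattern after a rate change
140 MB 0x0F0F
"""

ENTRIES = read_vectors(io.StringIO(SAMPLE))
# Assume reset deasserts at cycle 500 and PLL lock takes 2000 cycles.
RETIMED = retime(ENTRIES, start_cycle=500, delays={100: 2000})
```

Keeping the delay table separate from the vector file means the original vectors stay untouched while the timing is tuned to the receiving PHY.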

Simulation - Interoperability over UCIe

This white paper focuses on the simulation interoperability of the Cadence IP for the UCIe advanced package. The lack of a platform for interoperability testing served as a challenge to demonstrate that the IP was developed according to the specification as it continued to evolve. Through the simulation of their respective UCIe PHYs, Intel and Cadence were able to flush out several issues with PHY compliance on both sides.

Interoperability testing revealed that the PHY lanes were being checked in an incorrect order. The PHY lane check was adjusted to check the lanes in the following order: TCKP/TCKN/TTRK/TRDCK.

Initial vectors provided for interoperability testing were discovered to be illegally skipping states. All MBINIT substates after MBINIT.CALIB_DONE were skipped. This was corrected with new vector release files.
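
A skipped-state problem of this kind can be caught mechanically by comparing an observed state trace with the expected sequence. The sketch below is one hypothetical way to do that. The MBINIT substate names and their order are an assumption written from memory of the specification and should be verified against the revision in use; the trace itself is invented, with `MBINIT.CAL` standing in for the point where calibration completes.

```python
# Assumed MBINIT substate order; verify against the UCIe specification.
MBINIT_SUBSTATES = [
    "MBINIT.PARAM",
    "MBINIT.CAL",
    "MBINIT.REPAIRCLK",
    "MBINIT.REPAIRVAL",
    "MBINIT.REVERSALMB",
    "MBINIT.REPAIRMB",
]


def skipped_states(observed, expected):
    """Return the expected states the trace jumped over or never reached.

    States not in `expected` are ignored, so a full LTSM trace can be
    passed in directly. An incomplete trace is reported the same way as
    a trace that skipped its remaining states.
    """
    position = {name: i for i, name in enumerate(expected)}
    next_idx = 0
    skipped = []
    for state in observed:
        idx = position.get(state)
        if idx is None or idx < next_idx:
            continue  # outside this sequence, or a repeat of an earlier state
        skipped.extend(expected[next_idx:idx])
        next_idx = idx + 1
    skipped.extend(expected[next_idx:])  # states that were never reached
    return skipped


# Invented trace that leaves MBINIT right after calibration.
TRACE = ["RESET", "SBINIT", "MBINIT.PARAM", "MBINIT.CAL",
         "MBTRAIN", "LINKINIT", "ACTIVE"]
MISSING = skipped_states(TRACE, MBINIT_SUBSTATES)
```

Running a check like this on every new vector release would flag an illegal shortcut before any simulation time is spent on it.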

Regression testing and interoperability between the established die links was an opportunity to improve the robustness of both PHYs and to prove both designs against various areas of the UCIe standard to add product quality enhancements when applicable.

Controller Simulation Interop

Building on the physical layer interop, the next step is to enable controller simulation interoperability.

The Controller, in this case, is a UCIe Streaming controller providing the following services:

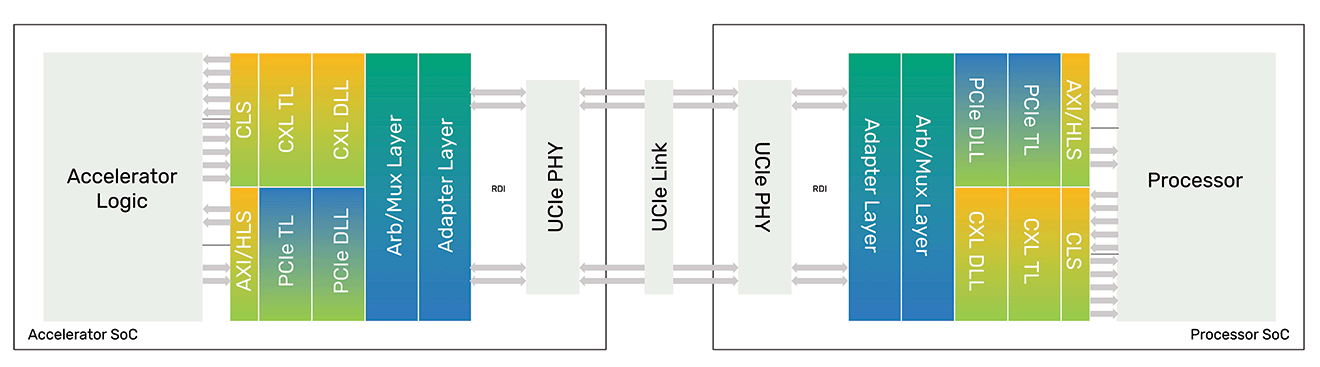

The UCIe Controller connects to the UCIe AP PHY via the RDI defined by the UCIe specification and described in the following diagram.

The UCIe-Streaming Controller integrated with the UCIe PHY is the minimum viable solution allowing a single-stack interop of the UCIe Adapter and PHY layers. The following high-level system diagram describes the Controller (Adapter) and PHY modules implemented in both chiplets.

Simulation Interop of the Controller solutions helps to prove the correct interpretation and implementation of new and complex state machines, interfaces, and register spaces defined by the UCIe specification.

The initial PHY interop, described previously, extends only to the RDI interface of the PHY. The inclusion of the controller extends the interop through the full Adapter Layer (Sideband, Mainband, CRC, Retry) to the FDI interface at the host side of the Controller. A UCIe Port can only begin to send data when the RDI interface and the FDI interface are ACTIVE, hence the importance of simulating both the Controller and the PHY.
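
The gating condition can be written down as a small predicate. This is only a sketch: the enum lists a subset of interface states named for illustration, and the bring-up sequence is hypothetical rather than taken from the specification or from either vendor's design.

```python
from enum import Enum


class InterfaceState(Enum):
    # Subset of interface states, named here for illustration only.
    RESET = "Reset"
    ACTIVE = "Active"
    RETRAIN = "Retrain"
    LINK_ERROR = "LinkError"


def port_can_send(rdi_state, fdi_state):
    """Data may flow only when both the RDI and the FDI report Active."""
    return (rdi_state is InterfaceState.ACTIVE
            and fdi_state is InterfaceState.ACTIVE)


# Hypothetical bring-up: the PHY-side RDI comes up before the host-side FDI.
BRING_UP = [
    (InterfaceState.RESET, InterfaceState.RESET),
    (InterfaceState.ACTIVE, InterfaceState.RESET),
    (InterfaceState.ACTIVE, InterfaceState.ACTIVE),
    (InterfaceState.RETRAIN, InterfaceState.ACTIVE),
]
SEND_ALLOWED = [port_can_send(rdi, fdi) for rdi, fdi in BRING_UP]
```

The second step of the sequence is the situation a PHY-only simulation ends in: the RDI is up, but nothing has yet shown that the Adapter can bring the FDI to the same state.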

UCIe Benefits to the Wider Community

The heterogeneous disaggregation of SoCs is imperative in overcoming the challenges of Moore’s Law and opens the door for System-in-Package (SiP), which is expected to revolutionize high-performance system implementations. By standardizing power-efficient, low-latency chiplets, UCIe is expected to be the key enabler of these SiP solutions.

While integrating IPs in different technology nodes within a single package poses many challenges, UCIe provides a blueprint to the engineering community by addressing both advanced and standard packaging solutions and tackling complex form factor, protocol, and electrical problems through detailed specifications and guidelines. Compliance and interoperability are critical parts of enabling seamless integration of the SiP components, and they are currently a developing segment of the UCIe standard. As the UCIe standard evolves, interoperability simulations and testing, as described in this paper, provide crucial information for the advancement of the standard.

Conclusion

The universality of the interconnect is the bedrock of the UCIe specification. Interoperability tests, both pre- and post-silicon, further this cause. Pre-silicon interop helps accelerate this progress by parallelizing the development of the logical and digital portions of the IP with the analog design.

The interop testing done between the Cadence and Intel UCIe design IPs was a success. Both sides could establish the link and proceed to the ACTIVE state. The interop provided a larger coverage of the design IP and the environment, allowing both sides to optimize their IP and test environment beyond what was possible in a back-to-back configuration of a vendor’s IP. With UCIe specs being actively defined and updated, such interop activities provide opportunities to unearth and resolve differences that are otherwise not revealed. This allows for greater confidence in the solution and the universal nature of the chiplet interconnect. Overall, the interop activities provided great value to both Cadence and Intel and created a foundation for more detailed interoperability testing in the future.

In conclusion, as the UCIe specification continues to evolve, there is a drive to set up an open standard ecosystem to enable design IP, verification IP, and testing practices for compliance. To keep up with the rapid pace of the chiplet ecosystem expansion, simulation and interoperability testing between different sources of UCIe IP are essential. As in the case of Intel and Cadence described in this paper, interoperability testing and simulation help to quickly and confidently validate the UCIe design IP, leading to a more robust solution.